How does SVC enable Distributed Caching in MEC?

Suvadip Batabyal, Department of Computer Science and Information Systems, BITS Pilani Hyderabad Campus, India.

1. Abstract

With ever-increasing demand for internet video services, service providers face a major challenge in delivering ultra-HD (2K/4K) video at sub-second latency. The multi-access edge computing (MEC) platform helps achieve this objective by caching popular content at the edge of the cellular network, which reduces delivery latency as well as the load on, and cost of, the backhaul links. However, MEC platforms have limited storage and processing resources, and caching content centrally may nullify the latency advantage by lowering the offloading probability. Distributed caching at the edge improves the likelihood of offloading and dynamically adjusts the load distribution among MEC servers. In this article, we propose an architecture for deploying MEC platforms that exploits the characteristics of scalable video encoding. Content providers use layered video coding techniques, such as scalable video coding (SVC), to adapt to network dynamics by dynamically dropping packets to reduce latency. We show how an SVC video readily lends itself to distributed caching at the edge, and we then investigate the latency-storage trade-off when the video layers are stored at different parts of the access network.

2. Introduction

According to the Cisco Annual Internet Report, nearly two-thirds of the global population will have access to the internet by 2023, with video services consuming the majority of the bandwidth provided by mobile network operators and internet service providers. Globally, devices and connections are growing faster (10% CAGR) than both the population (1.0% CAGR) and the number of internet users (6% CAGR). This growth poses a tremendous challenge for service providers in meeting consumers' bandwidth requirements, especially for the delivery of ultra-HD (2K and 4K) video. Additionally, demand is increasing for high-bit-rate video (15-18 Mbps for 4K), which makes delivering the best service even harder. In response, mobile network operators (MNOs) and service providers are (i) deploying ultra-dense networks for energy-efficient coverage of urban landscapes, and (ii) deploying multi-access edge computing (MEC) platforms for low-latency delivery of multimedia content and for reducing the transport cost of the backhaul networks.

2.1 Ultra-dense Networks

Ultra-dense networks (UDNs) [1] are realized through the deployment of small cells, which are access points with low transmission power (and hence small coverage). Each of these access points serves only a small fraction of the population. UDNs enable energy-efficient reuse of the frequency spectrum and improve link quality (bits/Hz/unit area). A small-cell access point may be a fully functional base station (picocell or femtocell) or a remote radio head (RRH). A fully functional small-cell BS can perform all the functions of a macrocell, at lower power, in a smaller coverage area. The small-cell BSs (SBSs) may be connected to the macrocell BS (MBS) using wireless or wired backhaul. Although wired backhaul can provide a higher data rate, wireless backhaul is more cost-effective and scalable given the growing number of SBSs.

2.2 Multi-access Edge Computing

MEC is a natural development in the evolution of mobile base stations and the convergence of IT and telecommunications networking. Multi-access edge computing will enable new vertical business segments and services for consumers and enterprise customers. It allows software applications to efficiently tap into local content and obtain real-time information about local access-network conditions. Deploying services and caching content at the network edge relieves mobile core networks of further congestion and allows local demand to be served efficiently. MEC use cases include computation offloading, distributed caching and content delivery, and enhanced web performance.

The MEC architecture [2] typically consists of host-level and system-level management. The core component of MEC system-level management is the multi-access edge orchestrator (MEO), which has an overview of the complete MEC system. The MEO has visibility over the resources and capabilities of the entire mobile edge network, including a catalog of available applications.

3. Deployment Architecture

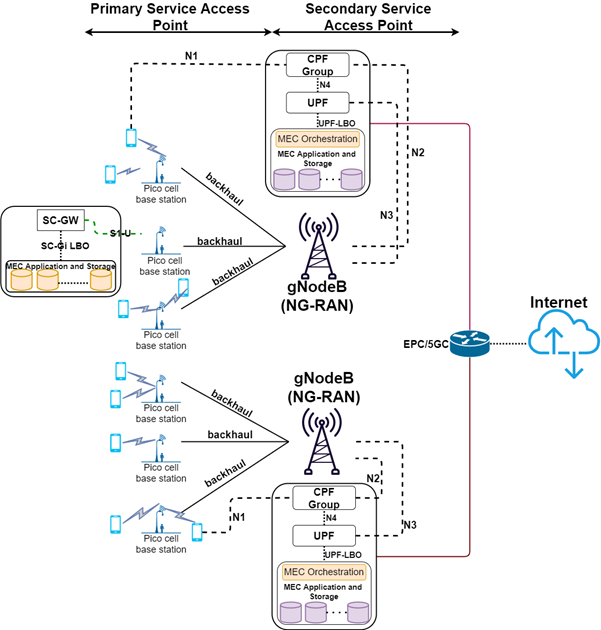

Figure 1: Architecture for SVC-based distributed caching.

Figure 1 shows the proposed deployment architecture. The architecture is divided into two slices: (i) primary service access points (P-SAPs) and (ii) secondary service access points (S-SAPs). The P-SAPs consist of the small-cell base stations together with the MEC platform. The S-SAPs consist of the macro-cell base stations and the MEC platform. User equipments (UEs) are typically attached to, and served by, the P-SAPs. A UE attaches to an S-SAP only when no P-SAP provides coverage.

The P-SAPs comprise two main modules: the user plane functionality (UPF) and the MEC platform. The S-SAPs comprise three main modules: the UPF, the control plane functionality (CPF), and the MEC platform. In the P-SAPs, the UPF consists of a small-cell serving gateway (SC-GW) [3]. The SC-GW is primarily responsible for routing traffic between the core network and the UEs. It can also handle handover when a UE moves from one SBS to another. The SC-GW is attached to the MEC platform through an SC service gateway interface with local breakout (SC-Gi LBO). Both the SC-Gi LBO and the MEC application may be hosted as virtual network functions (VNFs) on the same MEC platform. In the S-SAPs, the CPF includes functions such as the network exposure function (NEF), the network repository function (NRF), and the authentication server function (AUSF). The UPF, which hosts the serving gateway, is connected to the CPF through the N4 interface. As in the P-SAP, the UPF is connected to the MEC platform through the S-GW LBO.
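The attachment rule can be written as a small selection function. This is a minimal illustration only and is not part of the proposed system. The `ServiceAccessPoint` class, its `covers_ue` flag, and the `attach` function are hypothetical names, and coverage is reduced to a single boolean per access point.

```python
from dataclasses import dataclass
from typing import List, Optional

# Modules per slice, as listed in the architecture description.
MODULES = {
    "P-SAP": ("UPF (SC-GW)", "MEC platform"),
    "S-SAP": ("UPF", "CPF", "MEC platform"),
}

@dataclass
class ServiceAccessPoint:
    name: str
    kind: str          # "P-SAP" (small cell + MEC) or "S-SAP" (macro cell + MEC)
    covers_ue: bool    # hypothetical: whether this SAP currently covers the UE

def attach(saps: List[ServiceAccessPoint]) -> Optional[ServiceAccessPoint]:
    """Return the SAP a UE attaches to: a covering P-SAP if one exists,
    otherwise a covering S-SAP, otherwise None (no coverage at all)."""
    for kind in ("P-SAP", "S-SAP"):
        for sap in saps:
            if sap.kind == kind and sap.covers_ue:
                return sap
    return None

saps = [
    ServiceAccessPoint("sbs-1", "P-SAP", covers_ue=False),
    ServiceAccessPoint("mbs-1", "S-SAP", covers_ue=True),
]
chosen = attach(saps)
print(chosen.name, MODULES[chosen.kind])  # mbs-1 ('UPF', 'CPF', 'MEC platform')
```

Because P-SAPs are scanned first, the macro cell is chosen only when every small cell reports no coverage.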

The MEC platform (in both P-SAPs and S-SAPs) consists primarily of two components: the MEC orchestrator (MEO), and the MEC applications with storage. The MEO is responsible for maintaining an overall view of the MEC system based on the deployed MEC hosts, available resources, MEC services, topology, etc. The MEC application services may include computation offloading, content delivery, edge video caching, etc. The storage system holds the content that is delivered to the UE in real time when a service request arrives.

In the proposed architecture, we exploit the characteristics of layered video coding for distributed caching [4], [5]. A layered video coding technique, such as scalable video coding (SVC), produces a base layer and multiple enhancement layers. The number of layers in a video depends, among other factors, on the desired video (or downloadable segment) size for the application and on the level of scalability required. The enhancement (or higher) layers are encoded with reference to the base layer (layer 0), and each higher layer is encoded with reference to the layers below it. The base layer must be received both to play the video without stalls or skips, albeit at a lower quality, and to decode the enhancement layers. Each additional enhancement layer received further improves the video quality.

We propose two schemes for caching a video on the MEC platform. Case 1: the base layer of the video is stored at the SBS MEC, and the enhancement layers are stored at the MBS MEC. Case 2: the base layer is stored at the MBS MEC, and the enhancement layers are stored at the SBS MEC.

The caching decision is made by the MEO hosted at the S-SAP, i.e., in the gNodeB. As noted in Section 2.2, the MEO has visibility over the resources and capabilities of the entire mobile edge network, including a catalog of available applications. In the current context, the MEO decides whether to cache a particular video segment based on the available resources and system performance. In particular, it considers storage availability, the access profile of the video, and other video characteristics. Requests for a video are first handled by the SBS. If the video is available there, the user is served and the MEO is notified. The MEO tracks these requests and decides whether to forward the video to the SBS for storage. It runs daemon processes that track the system parameters on which such decisions are based.
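The layer dependencies and the two placement schemes can be captured in a few lines. In this minimal sketch, layers are numbered from 0 (base) upward, and the count of four enhancement layers is taken from the settings used in Section 4. `decodable_layers` and `placement` are hypothetical helpers. The sketch only checks which layers can be decoded from a given set of received layers; it does not model the MEO's caching decision.

```python
from typing import Dict, Set

BASE = 0       # layer 0 is the base layer
NUM_ENH = 4    # four enhancement layers, as in the Section 4 settings

def decodable_layers(received: Set[int]) -> int:
    """Count the layers that can actually be decoded. Layer k is usable only if
    layers 0..k-1 were also received, so decoding stops at the first gap."""
    n = 0
    while n in received:
        n += 1
    return n  # 0 means the base layer is missing: nothing can be played

def placement(case: int) -> Dict[str, Set[int]]:
    """Which layers each MEC cache holds under the two proposed schemes."""
    enh = set(range(1, NUM_ENH + 1))
    if case == 1:  # Case 1: base layer at SBS MEC, enhancements at MBS MEC
        return {"SBS": {BASE}, "MBS": enh}
    if case == 2:  # Case 2: base layer at MBS MEC, enhancements at SBS MEC
        return {"SBS": enh, "MBS": {BASE}}
    raise ValueError("case must be 1 or 2")

c1, c2 = placement(1), placement(2)
print(decodable_layers(c1["SBS"]))              # 1: base quality from the SBS alone
print(decodable_layers(c1["SBS"] | c1["MBS"]))  # 5: all layers, full quality
print(decodable_layers(c2["SBS"]))              # 0: enhancements alone are undecodable
```

The last line shows why Case 2 depends on the MBS. Without the base layer, the enhancement layers cached at the SBS cannot be used.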
We analyze the trade-off between latency and storage capacity for both schemes and identify the factors that affect their performance.

4. Trade-off Analysis

We analyze the trade-off between cache capacity and latency using an SVC video. The video consists of 20 GOPs, each 2 seconds long. It is encoded at a constant bit rate of 16 Mbps, a typical average bit rate for 2K video at 30 frames per second. Each video has a base layer and four enhancement layers. The base layer accounts for 80% of the GOP size, and the enhancement layers together account for the remaining 20%. With these settings, each GOP is 32 Mb (16 Mbps × 2 s). Of this, 25.6 Mb belongs to the base layer and 6.4 Mb to the enhancement layers taken together.
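The GOP sizes follow directly from the bit rate, the GOP duration, and the 80/20 split. The variable names in this quick check of the arithmetic are illustrative. Note that an exact 80/20 split of a 32 Mb GOP gives 25.6 Mb and 6.4 Mb.

```python
BITRATE_MBPS = 16   # constant bit rate of the SVC stream
GOP_SECONDS = 2     # duration of one GOP
NUM_GOPS = 20       # GOPs in the video
BASE_SHARE = 0.80   # base layer share of each GOP; enhancements take the rest

gop_mb = BITRATE_MBPS * GOP_SECONDS   # megabits per GOP
base_mb = BASE_SHARE * gop_mb         # base layer per GOP
enh_mb = gop_mb - base_mb             # all four enhancement layers per GOP
video_mb = NUM_GOPS * gop_mb          # whole 40 s video

print(gop_mb, round(base_mb, 1), round(enh_mb, 1), video_mb)  # 32 25.6 6.4 640
```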

Next, we assume that the SBS can provide a data rate between 100 Mbps and 1000 Mbps, depending on its configuration, although in practice data rates above 500 Mbps have yet to be realized. The MBS can provide a data rate between 10 Mbps and 100 Mbps. We assume that an MBS has four times the storage capacity of an SBS. A similar assumption and analysis can be found in [6]. Note that the SBS and MBS are related asymptotically in storage size and download data rate: the MBS offers more storage, while the SBS offers a higher data rate. Therefore, any other choice of experimental parameters that preserves this asymptotic relation will not change the basic inferences.
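The following back-of-the-envelope sketch estimates the pure transmission time of one GOP under the two placements from Section 3. It is not the author's latency model. It assumes every layer is a cache hit and ignores backhaul and propagation delay. It also assumes, hypothetically, that the base and enhancement layers are fetched in parallel, so a GOP is complete when the slower part arrives. `transfer_s` and `gop_latency` are illustrative names.

```python
BASE_MB, ENH_MB = 25.6, 6.4   # per-GOP layer sizes implied by the 80/20 split

def transfer_s(size_mb: float, rate_mbps: float) -> float:
    """Transmission time in seconds for size_mb megabits over a rate_mbps link."""
    return size_mb / rate_mbps

def gop_latency(case: int, sbs_mbps: float, mbs_mbps: float) -> float:
    """Time to receive one full GOP, assuming both layer groups are cache hits
    and are fetched in parallel; the GOP is ready when the slower part arrives."""
    if case == 1:   # base layer from SBS, enhancement layers from MBS
        return max(transfer_s(BASE_MB, sbs_mbps), transfer_s(ENH_MB, mbs_mbps))
    if case == 2:   # base layer from MBS, enhancement layers from SBS
        return max(transfer_s(BASE_MB, mbs_mbps), transfer_s(ENH_MB, sbs_mbps))
    raise ValueError("case must be 1 or 2")

# Lower and upper ends of the assumed SBS / MBS rate ranges.
for sbs, mbs in [(100, 10), (1000, 100)]:
    print(sbs, mbs, f"{gop_latency(1, sbs, mbs):.3f}", f"{gop_latency(2, sbs, mbs):.3f}")
# 100 10 0.640 2.560
# 1000 100 0.064 0.256
```

Even in this simplified form, Case 1 keeps GOP latency lower at both ends of the assumed rate ranges, because it places the larger base layer on the faster SBS link.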

4.1 Caching Rules

We consider two caching rules:

1. Rule-1: The base layer of a video is stored in the SBS cache, and its enhancement layers are stored in the MBS cache. If the base layer of a video is found in the SBS, its enhancement layers will be found in the MBS. The converse does not hold: if the base layer is not found in the SBS, the enhancement layers may or may not be in the MBS, depending on the popularity of the video.

2. Rule-2: The base layer of a video is stored in the MBS cache, and its enhancement layers are stored in the SBS cache. The base layer is found in the MBS if and only if the enhancement layers are found in the SBS.
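Both rules are invariants on the cache contents, so they can be checked mechanically. This minimal sketch models each cache as a dictionary that maps a layer class (`"base"` or `"enh"`) to the set of video IDs it holds. `rule1_holds`, `rule2_holds`, and `lookup` are hypothetical helpers. Treating a miss as a fetch from the core network is an assumption; the text does not state it.

```python
from typing import Dict, Set

# Hypothetical cache model: layer class -> set of cached video IDs.
Cache = Dict[str, Set[str]]   # keys: "base", "enh"

def rule1_holds(sbs: Cache, mbs: Cache) -> bool:
    """Rule-1: base in SBS implies enhancements in MBS; the converse need not hold."""
    return sbs["base"] <= mbs["enh"]

def rule2_holds(sbs: Cache, mbs: Cache) -> bool:
    """Rule-2: base in MBS if and only if enhancements in SBS."""
    return mbs["base"] == sbs["enh"]

def lookup(video: str, sbs: Cache, mbs: Cache) -> Dict[str, str]:
    """Where each layer class of `video` is served from, preferring the SBS;
    'core' marks a miss fetched over the backhaul (an assumption)."""
    out = {}
    for layer in ("base", "enh"):
        if video in sbs[layer]:
            out[layer] = "SBS"
        elif video in mbs[layer]:
            out[layer] = "MBS"
        else:
            out[layer] = "core"
    return out

# Rule-1: v2's enhancements sit in the larger MBS cache without its base in the SBS.
sbs1 = {"base": {"v1"}, "enh": set()}
mbs1 = {"base": set(), "enh": {"v1", "v2"}}
print(rule1_holds(sbs1, mbs1))    # True
print(lookup("v2", sbs1, mbs1))   # {'base': 'core', 'enh': 'MBS'}

# Rule-2 violation: v2's base is in the MBS but its enhancements are not in the SBS.
sbs2 = {"base": set(), "enh": {"v1"}}
mbs2 = {"base": {"v1", "v2"}, "enh": set()}
print(rule2_holds(sbs2, mbs2))    # False
```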

Several cache replacement strategies have been proposed in this context [7]. We assume a popularity-based cache replacement strategy here.
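One simple form of popularity-based replacement is an LFU-style policy: count requests per item and, when space is needed, evict the least-requested items first. The sketch below is one such variant, not necessarily the strategy assumed here. The `PopularityCache` class and its admission rule (never evict content at least as popular as the newcomer) are illustrative choices, and sizes are in megabits.

```python
from collections import Counter
from typing import Dict, List

class PopularityCache:
    """LFU-style cache sketch: when space runs out, the least-requested cached
    items are evicted first. Sizes are in megabits."""

    def __init__(self, capacity_mb: float) -> None:
        self.capacity_mb = capacity_mb
        self.items: Dict[str, float] = {}   # item id -> size in Mb
        self.hits = Counter()               # item id -> request count (popularity)

    def used_mb(self) -> float:
        return sum(self.items.values())

    def request(self, item: str, size_mb: float) -> bool:
        """Record a request; return True on a hit. On a miss, admit the item
        only if enough space can be freed by evicting strictly less popular items."""
        self.hits[item] += 1
        if item in self.items:
            return True
        if size_mb > self.capacity_mb:
            return False
        freed = self.capacity_mb - self.used_mb()
        chosen: List[str] = []
        for victim in sorted(self.items, key=lambda k: self.hits[k]):
            if freed >= size_mb or self.hits[victim] >= self.hits[item]:
                break
            chosen.append(victim)
            freed += self.items[victim]
        if freed >= size_mb:
            for victim in chosen:
                del self.items[victim]
            self.items[item] = size_mb
        return False

cache = PopularityCache(capacity_mb=51.2)   # room for two 25.6 Mb base layers
for vid in ["v1", "v1", "v2", "v3", "v3", "v3"]:
    cache.request(vid, 25.6)
print(sorted(cache.items))  # ['v1', 'v3']
```

In the usage example, `v2` is evicted once `v3` becomes more popular than it, while `v1`, requested twice, stays cached.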

4.2 Results

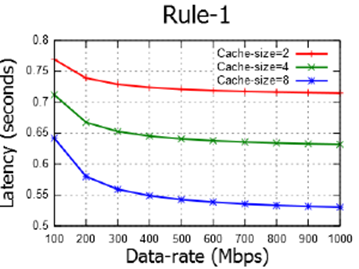

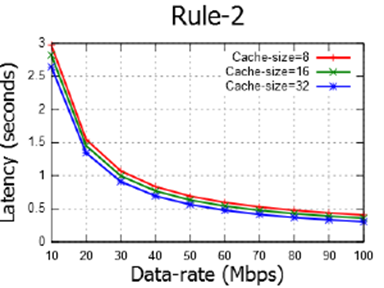

Figure 2: Latency (seconds) versus data rate (Mbps) under (a) Rule-1 and (b) Rule-2.

Figures 2a and 2b show latency versus data rate for three different cache sizes under Rule-1 and Rule-2, respectively. We observe the following:

1. The average latency under Rule-1 is significantly lower than under Rule-2. This is due to the higher data rate of the SBS, which under Rule-1 serves the base layer, the bulk of each GOP.

2. As the data rate increases, latency decreases faster under Rule-2 than under Rule-1. This is because SBS data rates are asymptotically higher than MBS data rates, even at a smaller cache hit ratio.

3. At a data rate of 100 Mbps, latency is lower with the MBS than with the SBS. This is because of the higher cache hit ratio in the MBS.

4. The cache hit ratio does not play a significant role at asymptotically lower data rates, i.e., under Rule-2. This is because the lower transmission rate, compounded by the latency of the backhaul network, becomes the bottleneck.

5. Conclusion and Future Directions

We proposed an architecture for distributed caching of SVC video and analyzed the latency-storage trade-off. Higher storage capacity at the MEC is expected to incur a higher cost and to require more power. Therefore, an SBS can support only a small cache compared to an MBS. We find that the SBS yields significantly lower latency at higher data rates. On the other hand, the MBS can deliver similar latency through the higher hit ratio afforded by its larger cache. In the future, it would be interesting to investigate the trade-off between latency and cost, where cost may be calculated as a weighted sum of storage capacity and bandwidth.

References

[1] M. Kamel, W. Hamouda, and A. Youssef, “Ultra-Dense Networks: A Survey,” IEEE Communications Surveys & Tutorials, vol. 18, no. 4, pp. 2522-2545, Fourth Quarter 2016, doi: 10.1109/COMST.2016.2571730.

[2] ETSI GS MEC 003 V2.1.1, “Multi-access Edge Computing (MEC); Framework and Reference Architecture,” https://www.etsi.org/deliver/etsi_gs/mec/001_099/003/02.01.01_60/gs_mec003v020101p.pdf

[3] A. Filali, A. Abouaomar, S. Cherkaoui, A. Kobbane, and M. Guizani, “Multi-Access Edge Computing: A Survey,” IEEE Access, vol. 8, pp. 197017-197046, 2020.

[4] B. Jedari, G. Premsankar, G. Illahi, M. D. Francesco, A. Mehrabi, and A. Ylä-Jääski, “Video Caching, Analytics, and Delivery at the Wireless Edge: A Survey and Future Directions,” IEEE Communications Surveys & Tutorials, vol. 23, no. 1, pp. 431-471, First Quarter 2021, doi: 10.1109/COMST.2020.3035427.

[5] T. X. Tran and D. Pompili, “Adaptive Bitrate Video Caching and Processing in Mobile-Edge Computing Networks,” IEEE Transactions on Mobile Computing, vol. 18, no. 9, pp. 1965-1978, Sept. 2019, doi: 10.1109/TMC.2018.2871147.

[6] S. Zhang, N. Zhang, P. Yang, and X. Shen, “Cost-Effective Cache Deployment in Mobile Heterogeneous Networks,” IEEE Transactions on Vehicular Technology, vol. 66, no. 12, pp. 11264-11276, Dec. 2017.

[7] X. Jiang, F. R. Yu, T. Song, and V. C. M. Leung, “A Survey on Multi-Access Edge Computing Applied to Video Streaming: Some Research Issues and Challenges,” IEEE Communications Surveys & Tutorials, vol. 23, no. 2, pp. 871-903, Second Quarter 2021.

Bio

Suvadip Batabyal received his Ph.D. from Jadavpur University, India, in 2016. He is currently an Assistant Professor in the Department of Computer Science and Information Systems at BITS Pilani Hyderabad Campus. He received the UGC-BSR fellowship during his Ph.D. and the Best Ph.D. Forum Paper Award at IEEE ANTS 2017. In 2019, he received the TUBITAK visiting scientist fellowship from the Scientific and Technological Research Council of Turkey. His current research interests include QoE-aware video streaming, edge computing, and device-to-device communication.

IEEE Future Networks Tech Focus Editorial Board

Rod Waterhouse, Editor-in-Chief

Mithun Mukherjee, Managing Editor

Imran Shafique Ansari

Anwer Al-Dulaimi

Stefano Buzzi

Yunlong Cai

Zhi Ning Chen

Panagiotis Demestichas

Ashutosh Dutta

Yang Hao

Gerry Hayes

Chih-Lin I

James Irvine

Meng Lu

Amine Maaref

Thas Nirmalathas

Sen Wang

Shugong Xu

Haijun Zhang

Glaucio Haroldo Silva de Carvalho